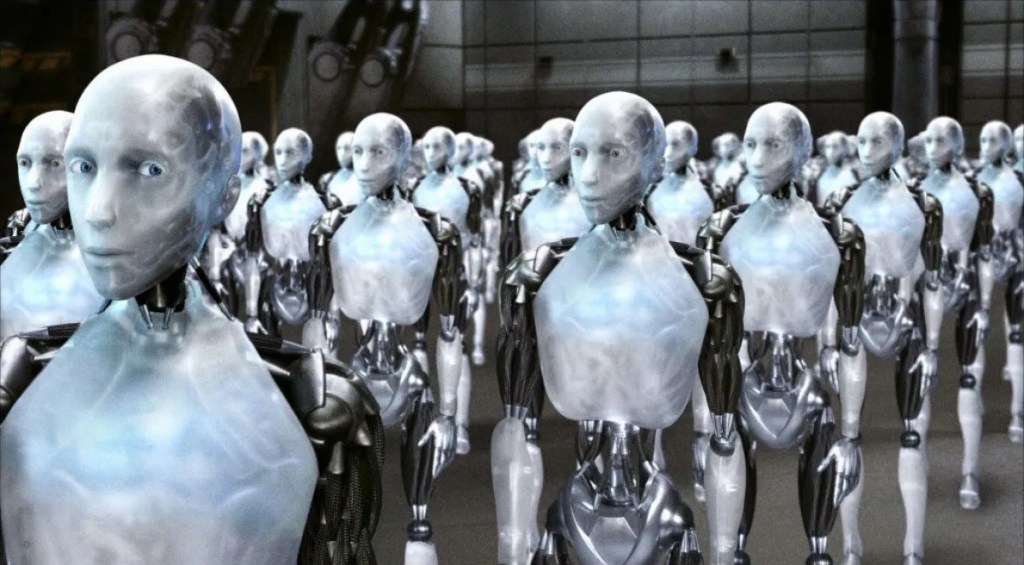

Since the release of the ever-popular tools ChatGPT, Bard, and BingAI, several communities have posed the question, “Will Artificial Intelligence take away our jobs?” The marketing behind these tools has done an excellent job showcasing their incredible ability to process natural human language and provide the user with regurgitated responses based on data models.

I could give you a lecture on how AI is built and the complexity behind these models, but it wouldn’t address the above question. It would be more about how AI has improved over the course of two decades. My simplified answer to “What is AI?” is this: You give a computer data, which it then stores and processes. When you ask the computer a question, it goes back to that data and chooses the best answer based on the available information. If the answer is wrong, you correct the computer (thereby changing the data), and if it is right, you reinforce the correct answer (again, changing the data). This iterative process is repeated countless times until the computer becomes more accurate in answering questions over time.

For example, consider your phone’s autocorrect feature. When I upgrade my iPhone and start texting, my clumsy thumbs will inevitably make spelling mistakes, or I may use my own personal slang/acronyms that the phone identifies as mistakes. In the first few attempts, iOS will autocorrect these mistakes because it “thinks” I made them unintentionally. However, if I continue using the same slang in my messages, the phone will start to learn that it is intentional and should be preserved as is. Remember the infamous “duck” autocorrect? It’s like that, but on steroids.
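To make that feedback loop concrete, here is a minimal sketch in Python. It is a toy illustration of the idea, not how iOS actually implements autocorrect; the class name `TinyAutocorrect`, the `keep_threshold` parameter, and the sample word are all made up for this example.

```python
from collections import Counter


class TinyAutocorrect:
    """Toy autocorrect that stops 'fixing' a word once the user has
    rejected the correction often enough. Purely illustrative."""

    def __init__(self, corrections, keep_threshold=3):
        self.corrections = dict(corrections)   # typed word -> suggested word
        self.keep_threshold = keep_threshold   # hypothetical tuning knob
        self.rejections = Counter()            # how often each fix was undone

    def suggest(self, word):
        # Once the user has insisted enough times, leave the word alone.
        if self.rejections[word] >= self.keep_threshold:
            return word
        return self.corrections.get(word, word)

    def reject_correction(self, word):
        # The user changed the word back: this is the "correct the computer" step.
        self.rejections[word] += 1


phone = TinyAutocorrect({"brb": "barb"})
first_try = phone.suggest("brb")        # still corrected at this point
for _ in range(3):
    phone.reject_correction("brb")      # the user keeps undoing the fix
after_feedback = phone.suggest("brb")   # now preserved as typed
print(first_try, after_feedback)
```

The only "learning" here is a counter, but the shape is the same as in my simplified definition: the computer answers, you correct it, and the stored data changes the next answer.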

Another example is your friendly chatbot on a website. When you type something into the chat screen, it analyzes the words and retrieves data from its database that best matches your input.
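A rough sketch of that kind of matching, again in Python, might look like the following. Real chatbots use far more sophisticated techniques; this version simply counts shared words, and the `FAQ` entries, the `best_answer` function, and the fallback message are invented for illustration.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of simple word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def best_answer(user_input, faq, fallback="Sorry, I don't know that one yet."):
    """Return the reply whose stored question shares the most words with the input."""
    user_words = tokenize(user_input)
    best_reply, best_score = fallback, 0
    for stored_question, reply in faq.items():
        score = len(user_words & tokenize(stored_question))
        if score > best_score:
            best_reply, best_score = reply, score
    return best_reply


# Hypothetical "database" of canned questions and answers.
FAQ = {
    "how do i reset my password": "Use the 'Forgot password' link on the sign-in page.",
    "what are your opening hours": "We are open 9am to 5pm, Monday to Friday.",
}

print(best_answer("I forgot my password, how do I reset it?", FAQ))
```

If nothing in the stored data overlaps with what you typed, the bot falls back to a canned apology, which is a fair picture of how limited a basic website chatbot can be.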

Tools like ChatGPT and Bard combine autocorrect, chatbot functionality, and data processing on a more advanced level. These tools can debug code and provide in-depth answers based on hard data (in some cases, real-time data), and some tools can even effortlessly edit imperfections out of a photo.

Combine this powerful technology with effective marketing and the influence of mass media, and you get a truly remarkable tool whose abilities can be amplified beyond what non-tech communities fully understand. ChatGPT gained popularity because it gives the perception of intelligence and engages users as if they were conversing with another human being. It's really, really cool. It demonstrates how chatbots and data can make artificial intelligence more accessible to the public.

However, the level of attention these chatbots receive has also attracted criticism. I have read articles with titles like “Which roles will AI likely replace?” or “Who will you be after ChatGPT takes your job?” that are simply fear-mongering. As of today, a chatbot is not going to take anyone’s job. Tools like ChatGPT can perform specific tasks exceptionally well, but they are far from being able to replace entire jobs. A job is a range of tasks that require human judgment and nuanced decisions, which cannot be programmed into a bot. Companies are already leveraging AI for certain tasks such as writing articles, writing code, and predicting the market, but more often than not, humans need to review the results to avoid spreading misinformation or making basic mathematical errors. With today’s technological limitations, AI is at best a form of staff augmentation.

Another reason why I don’t believe AI is a threat to jobs is our own technological limitations. We don’t currently possess sufficient processing power or the right set of physical materials to build technology capable of handling the complex tasks involved in fundamental jobs. I can confidently say that Bard won’t be able to handle situations where a vice president is upset because their Wi-Fi isn’t working, or when a user intentionally clicks on a phishing link. At least not today or in the next 10-15 years. What many of these articles fail to convey is that AI’s knowledge is based on the information we humans provide it with, and with great power comes great responsibility, to borrow a phrase.

Lastly, Artificial Intelligence has been around since the 1950s, and much like the printing press, your car, or even your Nest thermostat, its goal was to automate mundane tasks. It may sound like I’m oversimplifying how awesome and potentially scary AI is, but to some extent, it really is that simple. While we witness and acknowledge that AI can make our lives easier, it can also make our lives worse. If bots start taking our jobs, is it our responsibility to adapt to a new generation of technology, just as our predecessors did? Schrödinger didn’t live long enough to witness this leap in technology, and he never intended his thought experiment to be applied outside of quantum mechanics, but like his cat, AI remains in its box, and its ultimate impact is still unknown.
